Two experiments investigated the mental representations of objects’ locations in a virtual nested environment. In Experiment 1, participants learned the locations of objects (buildings or related accessories) in an exterior environment and then learned the locations of objects inside one of the centrally located buildings (interior environment).

Furthermore, our preliminary neuroimaging and behavioral results suggest that the training activates brain circuits involved in higher-order mechanisms of information encoding, triggering the activation of broader cognitive processes and reducing the working load on memory circuits (Experiments 2 and 3). Experiments 2 and 3 confirmed the behavioral results of Experiment 1. Results show that our virtual training enhances the ability to form allocentric representations and spatial memory (Experiment 1). Furthermore, to uncover the neural mechanisms underlying the observed effects, we performed a preliminary fMRI investigation before and after the training with MindTheCity!. We verified whether playing at MindTheCity! enhanced the performance on spatial representational tasks (pointing to a specific location in space) and on a spatial memory test (asking participant to remember the location of specific objects). With this aim, we used a novel 3D videogame ( MindTheCity! ), focused on the navigation of a virtual town. In the experiments, we investigated whether virtual navigation stimulates the ability to form spatial allocentric representations.

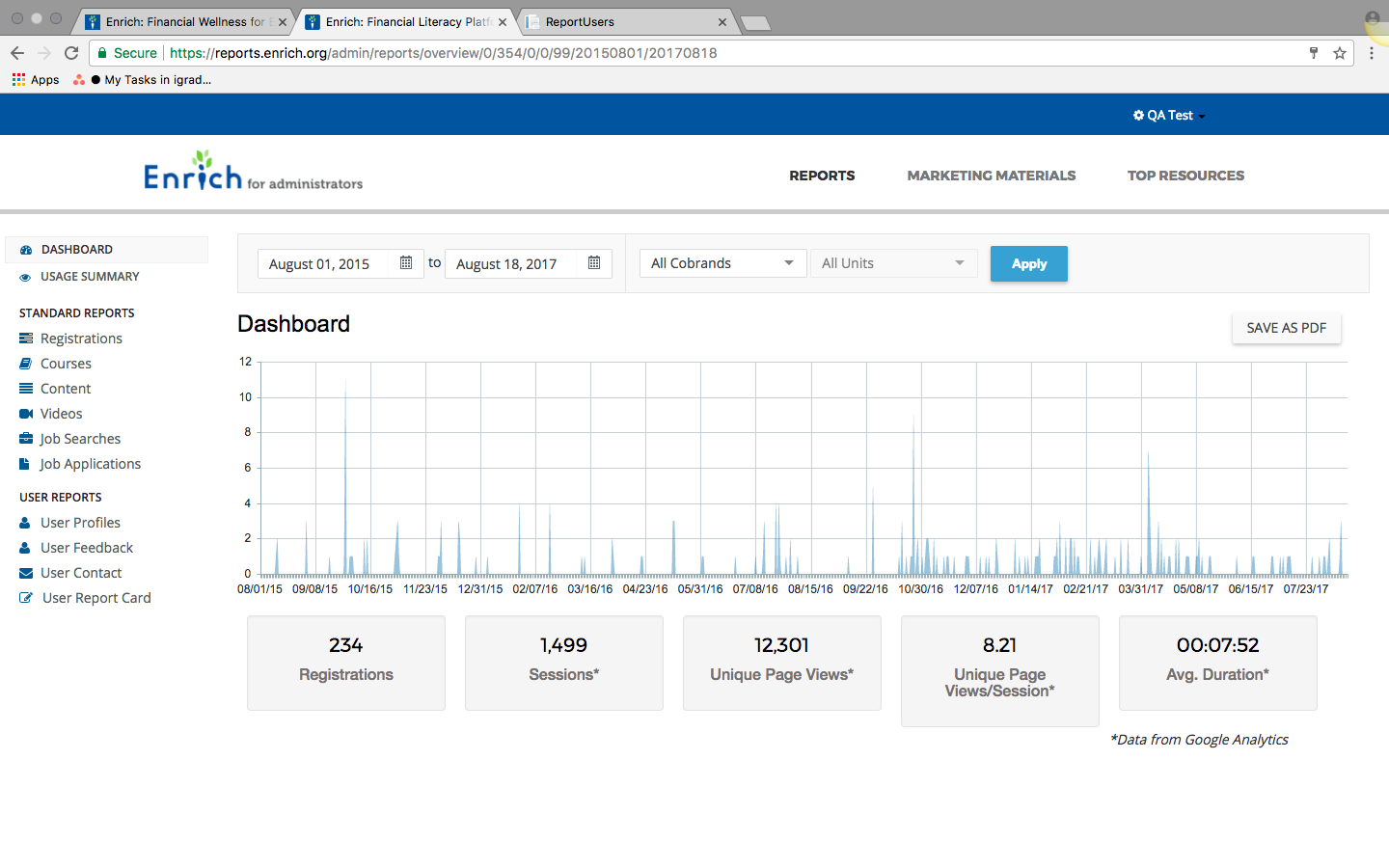

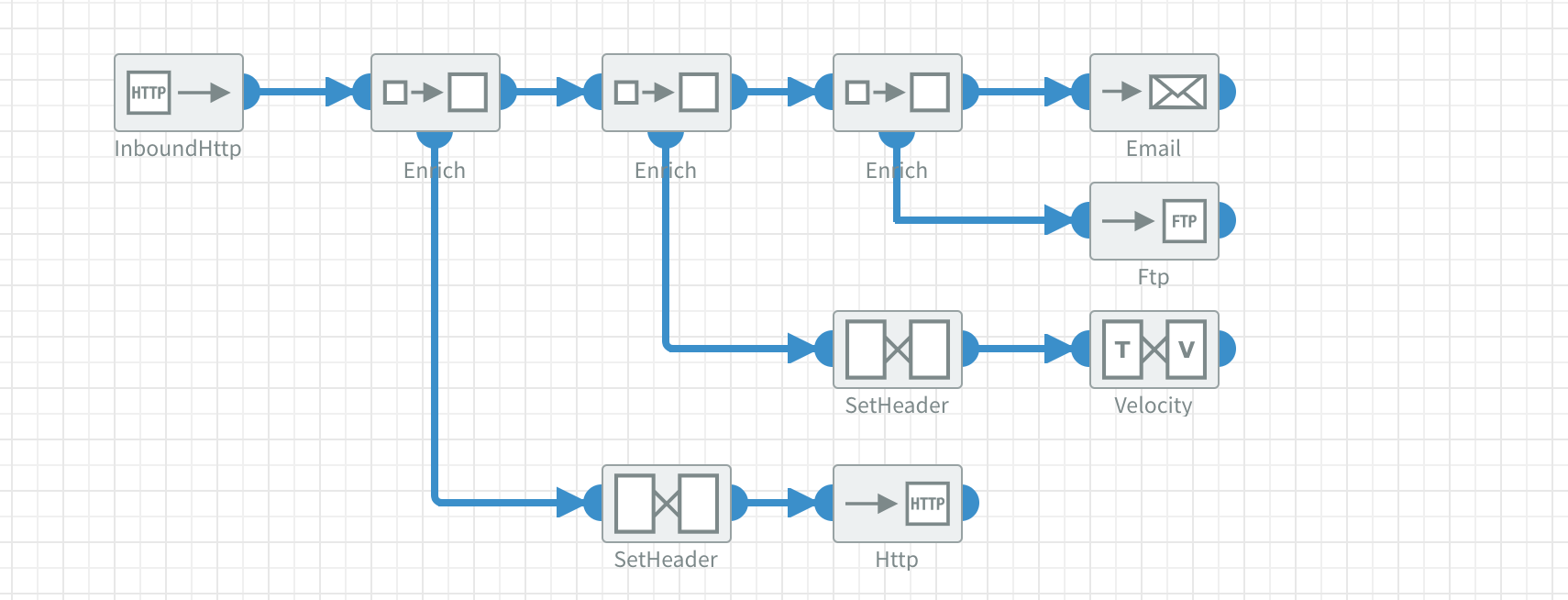

Enrich testview series#

Here, we present a series of three different experiments: Experiment 1, Discovery sample (23 young male participants) Experiment 2, Neuroimaging and replicating sample (23 young male participants) and Experiment 3 (14 young male participants). Our findings do not only provide new experimental evidence for the development of spatial knowledge, but also establish a new methodology to identify and assess the function of landmarks in spatial navigation based on eye tracking data.Īllocentric space representations demonstrated to be crucial to improve visuo-spatial skills, pivotal in every-day life activities and for the development and maintenance of other cognitive abilities, such as memory and reasoning. Applying the rich club coefficient, we found that these gaze-graph-defined landmarks were preferentially connected to each other and that participants spend the majority of their experiment time in areas where at least two of those houses were visible. As these high node degree houses fulfilled several characteristics of landmarks, we named them "gaze-graph-defined landmarks". The importance of these houses was supported by the hierarchy index, which showed a clear hierarchical structure of the gaze graphs. Our results revealed that 10 houses had a node degree that exceeded consistently two-sigma distance from the mean node degree of all other houses. To investigate the importance of houses in the city, we applied the node degree centrality measure. Applying graph partitioning, we found that our virtual environment could be treated as one coherent city. On these, we applied graph-theoretical measures to reveal the underlying structure of visual attention.

To extract what participants looked at, we defined "gaze" events, from which we created gaze graphs.

The analysis is based on eye tracking data of 20 participants, who freely explored the virtual city Seahaven for 90 minutes with an immersive VR headset with an inbuild eye tracker. Here, we propose a method that allows one to quantify characteristics of visual behavior by using graph-theoretical measures to abstract eye tracking data recorded in a 3D virtual urban environment. Recent technical advances allow new options to conduct more naturalistic experiments in virtual reality (VR) while additionally gathering data of the viewing behavior with eye tracking investigations. Vision provides the most important sensory information for spatial navigation.
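The node degree, two-sigma and rich club steps described for the Seahaven gaze graphs can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not the study's pipeline. It assumes that:

- each participant's gaze events have already been reduced to an ordered list of house labels;
- an undirected edge joins two houses whenever a gaze on one is directly followed by a gaze on the other;
- "two-sigma" uses the population standard deviation of all other houses' degrees;
- "consistently" means a house is flagged in every participant's graph.

All function names and the toy sequences are hypothetical. The rich club coefficient follows the common unnormalized definition φ(k) = 2E_k / (N_k(N_k - 1)). Here N_k is the number of houses with degree greater than k, and E_k is the number of edges among them.

```python
from statistics import mean, pstdev


def build_gaze_graph(gaze_sequence):
    """Undirected gaze graph as {house: set(neighbouring houses)}.

    Assumed (hypothetical) edge rule: consecutive gazes on two different
    houses link them; repeated gazes on the same house add no self-loop.
    """
    graph = {house: set() for house in gaze_sequence}
    for a, b in zip(gaze_sequence, gaze_sequence[1:]):
        if a != b:
            graph[a].add(b)
            graph[b].add(a)
    return graph


def node_degrees(graph):
    """Node degree centrality: number of distinct neighbours per house."""
    return {house: len(neighbours) for house, neighbours in graph.items()}


def two_sigma_houses(graph):
    """Houses whose degree exceeds mean + 2 * SD of all *other* houses' degrees."""
    degrees = node_degrees(graph)
    flagged = set()
    for house, degree in degrees.items():
        others = [d for h, d in degrees.items() if h != house]
        if len(others) < 2:
            continue  # too few houses to estimate a spread
        if degree > mean(others) + 2 * pstdev(others):
            flagged.add(house)
    return flagged


def consistent_landmarks(graphs):
    """Assumed reading of 'consistently': flagged in every participant's graph."""
    flagged_sets = [two_sigma_houses(g) for g in graphs]
    return set.intersection(*flagged_sets) if flagged_sets else set()


def rich_club_coefficient(graph, k):
    """Unnormalized rich club coefficient phi(k) = 2 E_k / (N_k (N_k - 1))."""
    degrees = node_degrees(graph)
    rich = {h for h, d in degrees.items() if d > k}
    n = len(rich)
    if n < 2:
        return float("nan")  # undefined with fewer than two rich houses
    # Each edge between rich houses is counted once from each endpoint.
    edges = sum(len(graph[h] & rich) for h in rich) // 2
    return 2 * edges / (n * (n - 1))


# Toy gaze sequences (invented for illustration, not data from the study).
participant_1 = ["H", "a", "H", "b", "H", "c", "H", "d", "H", "e"]
participant_2 = ["b", "H", "f", "H", "a", "H", "g", "H"]
graphs = [build_gaze_graph(p) for p in (participant_1, participant_2)]
print(consistent_landmarks(graphs))  # {'H'}

# Two hubs ("H" and "K") that are linked to each other.
two_hubs = build_gaze_graph(["H", "a", "H", "K", "b", "K", "c", "K", "H", "d"])
print(rich_club_coefficient(two_hubs, k=1))  # 1.0
```

A graph library such as NetworkX provides degree and rich club functions directly. The plain-Python version only makes each definition explicit. The unnormalized φ(k) would usually be compared against degree-preserving randomized graphs before claiming a rich club effect.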
